Data is Wicked

What does that mean for our tools?

Doing data science well is challenging. It takes a village of people from varied backgrounds: data scientists to produce robust insights, data engineers to make the data available and organize it usefully, and product managers and domain experts to help translate the real world into data. Each of these roles (and others) brings different concerns to the table, but the language they share is the data model. That makes our data model a key point of leverage for outsized impact.

Our data model is the most expressive formulation of our problem. It contains what we have measured, how entities relate, and effectively all the semantic meaning we have captured. The data model is the lens through which we view our problems, and it imposes the constraints within which we must find our solutions. The data model is neither right nor wrong; it is an abstraction of how we can understand our problem.

There are infinitely many ways we could model the data for a problem, and formulating a problem is most of the work of solving it. Consequently, the ability to iterate on both our understanding of the problem (expressed through a data model) and our solution is critical for discovering solutions.

Data problems are basically wicked problems:

The solution depends on how the problem is framed and vice versa (i.e., the problem definition depends on the solution).

Stakeholders have radically different world views and different frames for understanding the problem.

The constraints that the problem is subject to and the resources needed to solve it change over time.

The problem is never solved definitively.

We can see that these criteria map pretty cleanly to almost all data problems we work on:

Our data model is part of the problem formulation; it is the lens through which we see our problem.

Many stakeholders are often involved (product, product engineering, data science, data engineering, infrastructure, etc.), and they hold different opinions based on different concerns. For example, a data scientist may care primarily about the faithfulness of their metric, while infrastructure engineers may be primarily concerned with cost.

Over time, as we understand the problem more deeply and can engage more people to help, we need to rethink our approaches. For example, today we might base our metrics on clicks, and in the future we may adopt more sophisticated metrics that align more closely with customer value.

Teams will keep maintaining and improving the solution; it's only over if the company folds!

Wicked Problems Need Wicked Tooling

There's no getting around it: wicked problems are hard. There's likely no silver bullet, but it's worth thinking about how systems and tooling could help us solve such problems. Let's go through a couple of examples.

Chloe is a data scientist building a recommendation system for books. Chloe's team is using all the latest and greatest infrastructure to promote "best practices" and high velocity. They have all you could need, with a feature store, a model store, and even one of those newfangled evaluation stores:

Credit: Aparna Dhinakaran

Chloe starts by taking the existing model and looking at some existing metrics. The model store and evaluation store each have their own UI. In the model store UI, she finds the model she's looking for and its latest version, then looks that version up in the evaluation store.

Next, Chloe wants to understand how she might improve the model, so she decides to look at some queries that were poorly served. Luckily, the evaluation store has been tracking these all along, and she can sample through them. It even shows SHAP explanations, so Chloe can see the key drivers of each bad recommendation.

Chloe identifies new features that could help the model make better predictions in these cases. She starts by defining a feature in a notebook, which lets her cycle between refining the definition and analyzing the feature's properties (missingness, correlation with the target, etc.).

Now she's ready to put the feature into the feature store. But the way she wrote her code in the notebook and the way the feature store expects features to be written are very different. She now has to write a YAML config file and a new Python file containing her implementation, structured the right way, and then run a command to generate the feature in the feature store. After some trial and error, she fixes all the issues with her YAML and starts the feature generation process. This usually takes about 20 minutes, so she decides to take a walk. When she gets back, the feature is computed and she's ready to pipe it into her model. But now she needs to update the model training pipeline.

Luckily, the team uses AwesomeFlow, where the model pipeline is composed of well-defined steps. She identifies the step that fetches features from the feature store and updates its config to pull the new feature. She trains a model and finds that it doesn't perform much better. She digs deeper and evaluates only on examples where she would expect the feature to help. No noticeable difference. She realizes that there was a bug in her feature, and she goes right back to the start of her workflow, hoping she'll get it right this time. She finally does, and has a model she wants to carry to A/B testing. She puts it into production and tests it against a few queries.

Let's contrast this with the analytics experience. Say Larry works in an analytics function at a medium-sized business. The company is concerned about customer churn and asks Larry to look into it. Larry fires up his trusty copy of Excel and dives into customer order data. He notices some data issues and fixes them right in the spreadsheet, using a mix of data validation functions, find + replace, and manual entry to identify and repair bad data. He selects certain columns to plot to get a graphical view of what's happening. Once he feels he understands some of the core factors that drive churn, he uses built-in statistical functions to build a churn model. At first he's not completely satisfied, and he realizes he is missing a key piece of information: the marketing promotions each customer engaged with. Unfortunately, this is stored in a different dataset, but Larry just downloads it and puts it right into Excel. After joining the data, he has to clean up a few bad records, but everything else is good to go. He polishes his analysis and prepares a report for stakeholders.

On the surface, these two problems have a lot of commonalities: they both require data cleaning, preprocessing, analysis, and modeling. But then why do we have two very different experiences?

Admittedly, part of the challenge is that Chloe's situation requires higher "production engineering quality" than Larry's. But I think there's something deeper here: Chloe spent a lot of her time on incidental problems (switching tools, waiting for things to compute, infrastructure issues, etc.), while Larry spent all his time on essential problems, i.e., the activities that really moved the needle.

Somehow, Larry's tooling seems better suited to solving wicked problems than Chloe's. It's worth asking: what makes tools good for solving wicked problems, and are we building our tools that way? To be clear, I'm not trying to attack infrastructure designs like feature stores, model stores, and evaluation stores; I really do think these are exciting developments in the space. But I have to ask: have we missed something about the end-to-end workflow? In deciding where to draw the dividing lines for our abstractions, we have broken the development workflow into pieces. How can we reunite it?

Taming the Wicked in Analytics

To understand how we might fix the broken workflow in our existing tools, we can look at why Excel works so well. There are clearly many reasons people would argue Excel is not a good choice, such as:

stability: if anyone can edit it, it's hard to ensure it keeps running

versioning: if a bad change was made, how do we know how to revert?

provenance: we may need to understand who (or what) edited what, especially for compliance

scale: our data might not fit into Excel's 1,048,576 row limit

But if there are so many reasons not to use Excel, why do people keep using Excel?

People refuse to stop using Excel because it empowers them and they simply don't want to be disempowered. - Gavin Mendel Gleason

If we are really honest with ourselves, these "problems" with Excel are accidental rather than essential complexity. None of them is a core problem of your business, yet many of us have come to believe they are essential. (As an aside, we can acknowledge that in some cases laws shift some of these concerns into 'essential' territory.)

Recall the key characteristics of (wicked) data problems:

The solution depends on how the problem is framed and vice versa (i.e., the problem definition depends on the solution).

Stakeholders have radically different world views and different frames for understanding the problem.

The constraints that the problem is subject to and the resources needed to solve it change over time.

The problem is never solved definitively.

Notice that addressing the concerns that lead people to avoid Excel (stability, versioning, provenance, scale) doesn't actually help with any of these characteristics. What, then, are the qualities that make Excel good for solving data problems? I propose two:

Excel is

iterable: you can quickly iterate on your formulation and solution many times, taking into account new information as it arrives; you can literally change your data right in Excel

accessible: you can share a spreadsheet with basically anyone and they will be able to view it — and most people can do some basic operations in Excel

And we can see similar qualities in many other analytics tools, such as Tableau, Mode, etc. Many analytics tools promote accessibility and iterability, but machine learning tools have largely missed the mark.

That's not to say analytics has solved the problem. Even analytics has accessibility challenges, because we focus so much on technical ability even though "analytics is not primarily technical". Still, I'm optimistic that we are making progress here, given the rise of roles like analytics engineers; the equivalent shift for data science seems less mature. We are certainly missing out on amazing data science when we expect data scientists to know k8s.

Wicked tools meet people where they are and help them iterate to better solutions.

Machine Learning is Hard because Data is Wicked

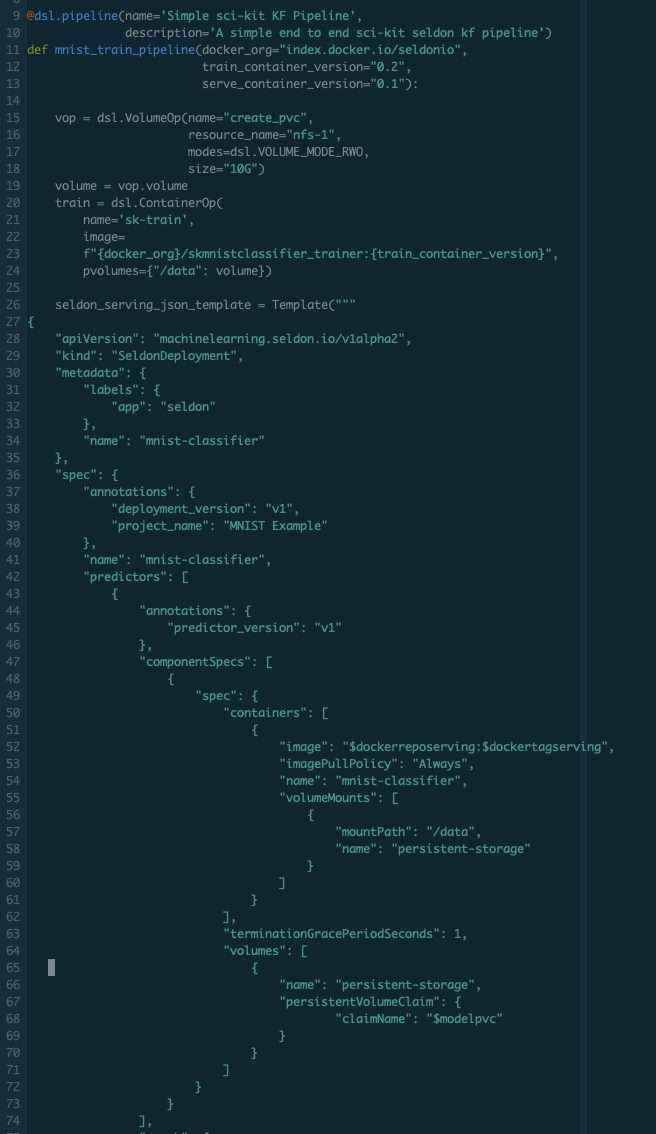

If we look at existing machine learning tools, we can see how much friction they have added to a data scientist's workflow. Kubeflow abstracts away much of the work of spinning up infrastructure, but is a data scientist really going to write their code as a Kubeflow pipeline definition and, between each change, click 'run' to see the results? Probably not. Machine learning tools have fetishized the 'last mile problem' (i.e., productionizing) so much that they have left the experimental workflow behind, creating a chasm between how data scientists 'naturally' work and how they must work to put something into production. And how about hooking together the five different tools you need for a complete workflow? You need a feature store, hyperparameter tuning, experiment tracking, a model store, model monitoring, etc. These tools rarely (if ever) offer turn-key integration; integrating them generally takes a lot of effort. In fact, many of them have their own bespoke web UIs. It seems that in our efforts to focus on smaller problems, we broke the workflow.

I'm certainly not the first person to notice this. Erik Bernhardsson has acknowledged that our tooling in the data space is bad, and that the fragmentation of the space shows there's an opportunity to build a more cohesive solution. Chip Huyen has demonstrated the problems with data scientists needing to know Kubernetes. Jacopo Tagliabue has shown that many of us should be doing ML at a modest scale without going overboard on infrastructure. And many others are echoing the same sentiment.

Don't get me wrong: I think many ML tools companies are driving a lot of great and important work. But when I look at the 'hello world' for Kubeflow, I worry that we are often optimizing the wrong things. This is not to say Kubeflow is necessarily bad, but that on its own it is a poor fit for what many people are using it for: a data science platform. It's clear the tools do not yet have a standard form, and platforms like this are a 'lowest common denominator'; there's a higher-level abstraction we've yet to find. I worry we may have missed the forest for the trees. In all our concern about getting things to scale, we may have forgotten other important properties, namely accessibility and iterability.

There are many products on the market, and many will have you believe they have solved the production data science problem. But most of them have missed the mark because they don't recognize that we are working on wicked data problems.

Accessible, iterative data platforms will bring in more domain experts, allowing us to distill their expertise more directly into our data. Most importantly, they will allow everyone to bring more of their experience to bear.

Building Wicked Tools

If we want to build better machine learning tools, we need to get serious about accessibility and iterability. But how do we do that?

Accessibility starts with the right abstraction(s). Excel's central abstraction is the spreadsheet, or the spreadsheet cell. Certainly some folks remember the paper spreadsheets that Excel replicates, but we should also give credit to the accessibility of the virtual spreadsheet as an abstraction. Almost anyone can grok what it is! If the core abstractions of your tools aren't as easily grokked as a spreadsheet, you will inherently have an accessibility problem.

But you can do many complex operations in Excel too, and many of these are clearly less accessible than the central spreadsheet abstraction. This is part of what makes Excel so powerful: it offers a continuum of control. In many tools there's one level of control, and when your experience or use case doesn't fit that level of abstraction perfectly, you likely can't use the tool at all! With Excel, you can use it for data entry, data cleaning, data visualization, etc., or all of them. The accessibility is through the roof!

Picking the right abstractions to support a continuum of control is how you maximize your tool's accessibility. There are more questions than answers here, but Ken Yocum has an interesting proposal for the layers of an AI platform, and folks like Jeremy Howard from FastAI have promoted the idea of a 'mid-level API'. Both ideas get at letting people work in your tools at different levels of abstraction.
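To make "continuum of control" a little more concrete, here is a hypothetical sketch of a layered API for a toy data-cleaning library. The names `fill_missing`, `Pipeline`, and `auto_clean` are invented for illustration and are not taken from Ken Yocum's proposal or from FastAI. Each layer is built from the one below it, so a user who outgrows the one-call default can drop down a level without switching tools.

```python
from statistics import mean
from typing import Callable, Dict, List, Optional

Row = Dict[str, Optional[float]]
Step = Callable[[List[Row]], List[Row]]


# Low level: a single primitive that users can call on its own.
def fill_missing(rows: List[Row], column: str, value: float) -> List[Row]:
    """Return new rows with None in `column` replaced by `value`."""
    return [{**row, column: value if row[column] is None else row[column]} for row in rows]


# Mid level: compose steps explicitly, mixing built-in and custom ones.
class Pipeline:
    def __init__(self, steps: List[Step]):
        self.steps = steps

    def run(self, rows: List[Row]) -> List[Row]:
        for step in self.steps:
            rows = step(rows)
        return rows


# High level: one call with sensible defaults, built from the layers below.
def auto_clean(rows: List[Row]) -> List[Row]:
    """Fill each column's missing values with that column's mean."""
    steps: List[Step] = []
    for column in rows[0]:
        observed = [row[column] for row in rows if row[column] is not None]
        if observed:
            # Bind the column and fill value now, not when the lambda runs.
            steps.append(lambda rs, c=column, v=mean(observed): fill_missing(rs, c, v))
    return Pipeline(steps).run(rows)


data = [{"a": 1.0, "b": None}, {"a": None, "b": 4.0}, {"a": 3.0, "b": 2.0}]
print(auto_clean(data))                                            # high level
print(Pipeline([lambda rs: fill_missing(rs, "a", 0.0)]).run(data))  # mid level
```

The design choice worth noting is that the high-level function is implemented with the same public pieces a user would reach for, so dropping down a layer exposes no hidden machinery.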

Iterability starts with tight feedback loops. The more tools I need to tab through to get a full picture of the workflow, the more cognitive overhead each iteration takes. Obviously, it's a fool's errand to try to build one tool that does everything. However, we can leverage existing interfaces and user experiences by meeting users where they are. Many in the data community are rejecting the notion that "notebooks aren't production": Netflix productionizes notebooks via Papermill, FastAI builds complete libraries in notebooks with nbdev, and Databricks allows scheduling notebooks as jobs.

The best way to promote iterability is to build with an SDK/API-first mindset. Don't pretend you are offering a turn-key solution, because you probably are not. Instead, expose your SDK/API, then build great experiences right in the tools your users are using today, whether that's Jupyter notebooks, a terminal, a Streamlit app, or whatever the crazy kids are using these days.
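Here is a hedged sketch of what "SDK/API first" can look like at small scale: the logic lives in an ordinary Python function that works wherever the user already is (a Jupyter notebook, a script, a Streamlit app), and the terminal experience is a thin `argparse` wrapper over that same function. `missing_rates` and its command-line interface are hypothetical examples, not an existing tool.

```python
import argparse
import csv
from typing import Dict, List, Optional


# The SDK: a plain function usable from a notebook, a script, or a Streamlit app.
def missing_rates(rows: List[Dict[str, Optional[str]]]) -> Dict[str, float]:
    """Fraction of empty (or absent) cells in each column."""
    if not rows:
        return {}
    return {col: sum(1 for row in rows if not row.get(col)) / len(rows) for col in rows[0]}


# A thin command-line wrapper around the same function, for terminal users.
def main(argv: Optional[List[str]] = None) -> int:
    parser = argparse.ArgumentParser(description="Report missing values per column of a CSV file.")
    parser.add_argument("path", help="CSV file to profile")
    args = parser.parse_args(argv)
    with open(args.path, newline="") as f:
        rates = missing_rates(list(csv.DictReader(f)))
    for col, rate in rates.items():
        print(f"{col}\t{rate:.0%}")
    return 0
```

In a notebook you would call `missing_rates(rows)` directly. To expose the terminal interface, a script would end with the usual `if __name__ == "__main__": sys.exit(main())` guard (after importing `sys`), so that something like `python profile_csv.py orders.csv` (file names hypothetical) runs the same code path.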

The Future

Ultimately, the future of data science platforms is all about solving wicked data problems, which means we need to focus more seriously on accessibility and iterability. Such a focus will allow us to bring more domain expertise to bear, communicate more effectively with stakeholders, and continually iterate on solutions as needs change. Future tools will:

empower many stakeholders with a layered API approach

plug into existing tools/workflows by being SDK/API first

be more declarative (like data warehouses with SQL are today)
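To illustrate what "more declarative" means, this sketch computes total spend per customer twice: once imperatively, spelling out the loop, and once declaratively as a SQL query. Python's built-in `sqlite3` module stands in for a data warehouse here, and the order data is made up.

```python
import sqlite3
from collections import defaultdict

# Made-up order data: (customer, amount).
orders = [("alice", 30.0), ("bob", 12.5), ("alice", 20.0), ("carol", 5.0)]


# Imperative: we spell out *how* to compute total spend per customer.
def totals_imperative(rows):
    totals = defaultdict(float)
    for customer, amount in rows:
        totals[customer] += amount
    return dict(sorted(totals.items()))


# Declarative: we state *what* we want; the SQL engine decides how.
def totals_declarative(rows):
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
    con.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    result = con.execute(
        "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
    ).fetchall()
    con.close()
    return dict(result)


print(totals_declarative(orders))
```

Both functions return the same totals, but only the SQL version leaves the execution strategy (scan order, grouping, indexes) to the engine, which is what lets a warehouse optimize or scale the same query without the user rewriting it.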

While I think there's a lot of misguided use of existing tools and infrastructure, I have many reasons to be optimistic. Databricks has a unified analytics platform that integrates many data roles into a single product. Products like Watchful and SnorkelFlow are bringing compelling experiences that integrate more domain expertise into machine learning workflows. Sisu is expanding the capabilities of analysts with machine learning. And projects like nbdev and FastAI are showing us how to bring more iterative workflows and accessibility to machine learning.

Data will always be wicked, so the future of data science platforms must be iterative and accessible.